Development and creativity.

MY WORK

Startups and side projects

25 Years of experience

40+ Experts on board

300+ Finished projects

3 Offices worldwide

In the last 17 years I have been fortunate to work with several companies, including startups and innovative software companies, where I have been able to contribute to the growth of their ideas.

🎓 LinkedIn

Creative Writing

Since 2022 I have been experimenting with learning sessions dedicated to creative writing. The method consists of studying the materials of instructors and texts, then presenting them on video as a form of review.

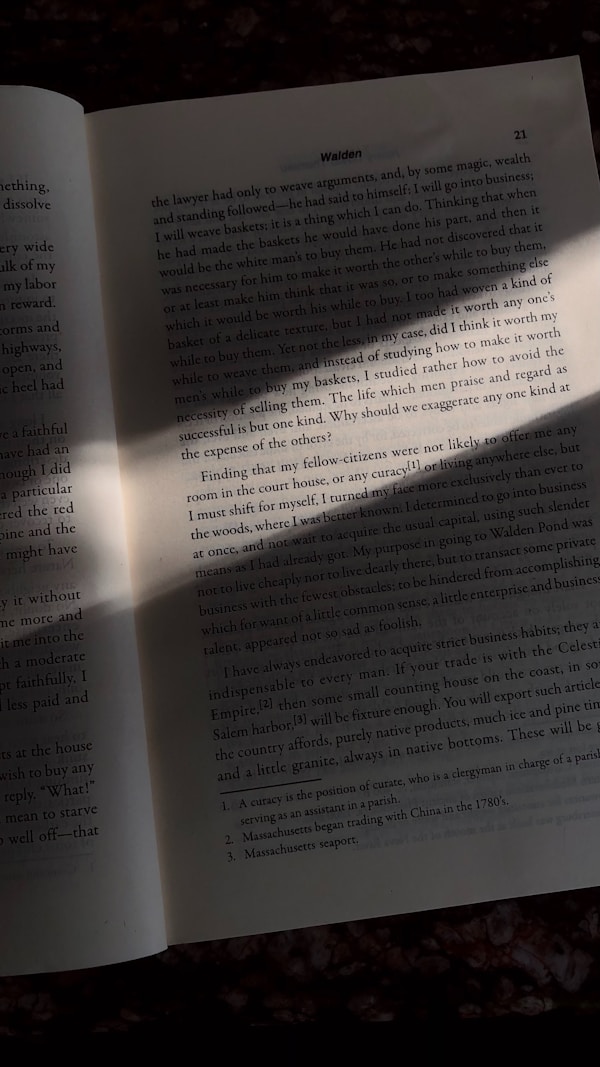

WRITING

Over the years I have written numerous articles for online newspapers and blogs. Now I curate simple in-depth articles on the topics that interest me, in Italian and English, on my gemlog.

gemini://chirale.org

There you can find a selection of these articles from the archive. Download Lagrange to explore Gemini (external website).

NOW ON

Writing

Technology

Programming

Game Design

Creative Writing

Unleash Your Storytelling Potential

JournaKit is a set of tools developed especially for writers and communication specialists. It simplifies tedious tasks and enhances your creations.

JournaKit 🖊️

Fueling the Growth of Startups and Tech Companies

PHOTO

What our clients say
